Hi. I’m Louis Kirsch.

I am a PhD student with Jürgen Schmidhuber at IDSIA (The Swiss AI Lab) working on meta reinforcement learning. Previously, I completed my Master of Research at University College London. My long-term research goal is to create RL agents that learn their own learning algorithm, making them truly general in the AGI sense. They should be able to design their own abstractions for prediction, planning, and learning, and continuously improve across a wide range of environments.

Short CV

- 2022-2023: Research Intern at Google

- Summer 2021: Research Scientist Intern at DeepMind

- Currently PhD student with Jürgen Schmidhuber at IDSIA (The Swiss AI Lab).

- Graduated as the best student of class 2018 from University College London supervised by David Barber (MRes Computational Statistics and Machine Learning).

- Graduated as the best student of class 2017 from Hasso Plattner Institute

  HPI is ranked 1st for computer science in most categories in Germany (CHE ranking 2015)

- Self-employed software developer during high school and my undergraduate studies [Project selection]

News

12/2022 When neural networks implement general-purpose in-context learners

Emergent in-context learning with Transformers is exciting! But what is necessary to make neural nets implement general-purpose in-context learning? 2^14 tasks, a large model + memory, and initial memorization to aid generalization.

— Louis Kirsch (@LouisKirschAI) December 9, 2022

Full paper https://t.co/yyp9467WgF

🧵👇(1/9) pic.twitter.com/3GlU9M1MCR

7/2022 My invited talk at ICML DARL 2022 covers how we can learn how to learn without any human-engineered meta-optimization

What would it take for algorithms to invent our future reinforcement learning algorithms?

— Louis Kirsch (@LouisKirschAI) July 22, 2022

In my invited talk at @darl_icml, I take you on a journey of meta-learning general-purpose learning algorithms.

At #ICML2022 Hall G 6:10pm

Work w/ @SchmidhuberAI @DeepMind and many others pic.twitter.com/dRh8uZD7em

02/2022 Our work at DeepMind on Introducing Symmetries to Black Box Meta Reinforcement Learning will appear at AAAI 2022!

10/2021 My work on Variable Shared Meta Learning (VSML) will appear at NeurIPS 2021!

Excited to share our preprint 'Meta Learning Backpropagation And Improving It'

— Louis Kirsch (@LouisKirschAI) February 26, 2021

VS-ML meta learns general purpose learning algos that start to rival backprop in their performance. This is achieved purely by weight sharing LSTMs - no grads required. https://t.co/IKSG0TVAsO

👇1/7 pic.twitter.com/6Olb0kmhnW

09/2021 I collaborated with some great people at DeepMind on general-purpose Meta Learning during an internship.

Our paper on Introducing Symmetries to Black Box Meta Reinforcement Learning.

How to meta-learn general-purpose RL algorithms that don't need any gradients?

— Louis Kirsch (@LouisKirschAI) September 23, 2021

Black-box methods (eg RL^2) tend to overfit.

We identify symmetries in backpropagation-based meta RL and apply them to black-box meta RL.

This improves generalization. https://t.co/7JLgB8zsLH

🧵1/8 pic.twitter.com/4hauGp5XZe

12/2020 I am an invited speaker at Meta Learn @ NeurIPS 2020.

Join me on Dec 11th 16:00 UTC online and learn more about my newest work on General Meta Learning.

Excited about my first invited talk @ Meta Learn NeurIPS 2020 on #MetaLearning general-purpose Learning Algorithms.

— Louis Kirsch (@LouisKirschAI) December 11, 2020

Includes #MetaGenRL and new work on #VSML. Unifies learned learning rules and learning in activations. Can learn to backprop.

16:00 UTC https://t.co/hHs8ad3w5f

10/2020 I have been awarded a total of 550 thousand GPU compute hours on the Swiss National Supercomputer.

Huge thanks to CSCS for making exciting new Meta Learning research possible!

12/2019 My first work on meta-learning RL algorithms has been accepted at ICLR 2020 including a spotlight talk!

Arxiv link: Improving Generalization in Meta Reinforcement Learning using Learned Objectives

Read more about it in my blog post

Recent publications

A complete list can be found on Google Scholar.

- General-Purpose In-Context Learning by Meta-Learning Transformers [ArXiv]

  Preprint. Internship project at Google with James Harrison, Jascha Sohl-Dickstein, and Luke Metz

- Eliminating Meta Optimization Through Self-Referential Meta Learning [ArXiv]

  Workshop paper at ICML and AutoML 2022

- Introducing Symmetries to Black Box Meta Reinforcement Learning [ArXiv]

  Conference paper at AAAI 2022

  Internship project at DeepMind with Sebastian Flennerhag, Hado van Hasselt, Abram Friesen, Junhyuk Oh, Yutian Chen

- Meta Learning Backpropagation And Improving It [Blog] [ArXiv]

  Workshop paper at NeurIPS Meta Learn 2020, (Kirsch and Schmidhuber 2020)

  Conference paper at NeurIPS 2021

- Improving Generalization in Meta Reinforcement Learning using Learned Objectives [Blog] [PDF]

  Conference paper at ICLR 2020, preprint on ArXiv (Kirsch et al. 2019)

- Modular Networks: Learning to Decompose Neural Computation [PDF]

  Conference paper at NIPS 2018 (Kirsch et al. 2018)

- Transfer Learning for Speech Recognition on a Budget [More]

  Workshop paper at ACL 2017 (Kunze and Kirsch et al. 2017)

- Framework for Exploring and Understanding Multivariate Correlations

  Demo track paper at ECML PKDD 2017 (Kirsch et al. 2017)

Recent blog posts

-

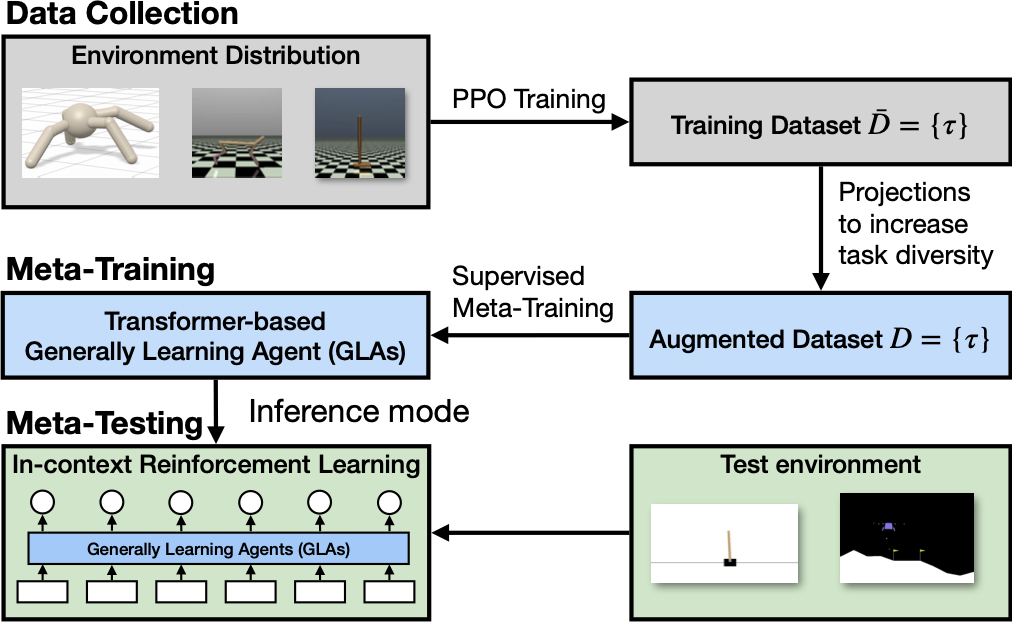

Towards General-Purpose In-Context Learning Agents

We meta-train in-context learning RL agents that generalize across domains (with different actuators, observations, dynamics, and dimensionalities) using supervised learning. [Continue reading]

-

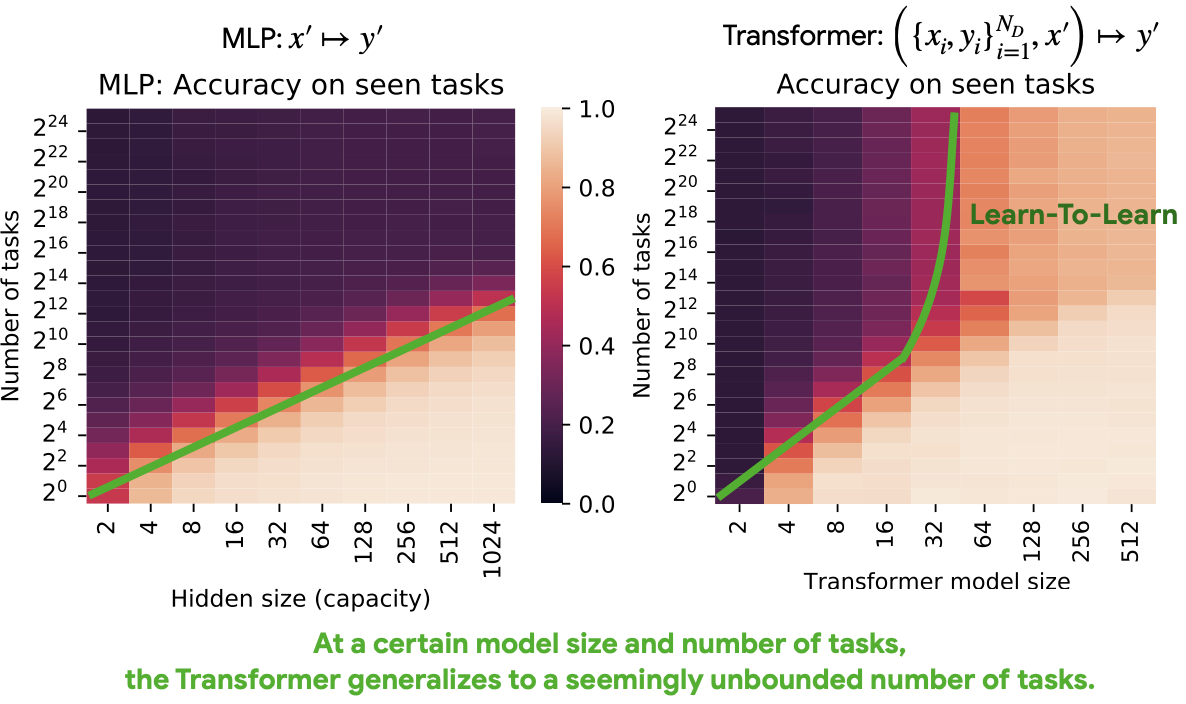

General-Purpose In-Context Learning

Transformers and other black-box models can exhibit in-context learning-to-learn that generalizes to significantly different datasets while undergoing multiple phase transitions in terms of their learning behavior. [Continue reading]
